Sidecar Integration

Overview

This guide describes how to set up and configure OPA and Envoy as sidecars. It is intended for developers who want to integrate with the Maersk Authorization Service's OPA solution. It covers the manual integration approach, in which the developer sets up the required Helm manifests, application policies, and GitHub Actions workflows in their application repository.

Warning

🚧 WORK IN PROGRESS

Steps

1. Update application helm chart

1.1 Add initContainer to deployment kubernetes manifest of your application

    initContainers:
      - name: proxy-init
        image: openpolicyagent/proxy_init:v8
        resources:
          limits:
            cpu: 100m
            memory: 128Mi
          requests:
            cpu: 100m
            memory: 128Mi
        # Configure the iptables bootstrap script to redirect traffic to the
        # Envoy proxy on port 8000. Envoy will be running as 1111, and port
        # 8282 will be excluded to support OPA health checks.
        args: ["-p", "8000", "-u", "1111", "-w", "8282"]
        securityContext:
          capabilities:
            add:
              - NET_ADMIN
          runAsNonRoot: false
          runAsUser: 0

1.2 Add Envoy sidecar to deployment kubernetes manifest of your application

    - name: envoy
      image: envoyproxy/envoy:v1.26.3
      resources:
        limits:
          cpu: 250m
          memory: 256Mi
        requests:
          cpu: 250m
          memory: 256Mi
      volumeMounts:
        - readOnly: true
          mountPath: /config
          name: proxy-config
      args:
        - "envoy"
        - "--config-path"
        - "/config/envoy.yaml"
      env:
        - name: ENVOY_UID
          value: "1111"

1.3 Add OPA sidecar to deployment kubernetes manifests of your application

    - name: opa
      image: openpolicyagent/opa:latest-envoy
      args:
        - "run"
        - "--server"
        - "--addr=localhost:8181"
        - "--diagnostic-addr=0.0.0.0:8282"
        - "--ignore=.*"
        - "--config-file=/config/config.yaml"
      livenessProbe:
        httpGet:
          path: /health?plugins
          scheme: HTTP
          port: 8282
        initialDelaySeconds: 5
        periodSeconds: 5
      readinessProbe:
        httpGet:
          path: /health?plugins
          scheme: HTTP
          port: 8282
        initialDelaySeconds: 1
        periodSeconds: 3
      env:
        - name: DEPLOYMENT_NAME
          value: {{ .Chart.Name }}-opa
        - name: OPA_TAG
          value: {{ .Values.opaTag }}
      envFrom:
        - secretRef:
            name: {{ include "<chart-name>.fullname" . }}-ghcr
      resources:
        limits:
          cpu: 500m
          memory: 256Mi
        requests:
          cpu: 250m
          memory: 256Mi
      volumeMounts:
        - mountPath: /config
          name: opa-config

1.4 Add configMap volumes to deployment kubernetes manifests of your application

    - name: proxy-config
      configMap:
        name: {{ include "<chart-name>.fullname" . }}-proxy
    - name: opa-config
      configMap:
        name: {{ include "<chart-name>.fullname" . }}-opa

1.5 Create Envoy configMap

Caution

Replace <APPLICATION PORT> in the "service" cluster of the configuration below with the port your application listens on.

    apiVersion: v1
    data:
      envoy.yaml: |-
        # envoy.yaml
        static_resources:
          listeners:
            - address:
                socket_address:
                  address: 0.0.0.0
                  port_value: 8000
              filter_chains:
                - filters:
                    - name: envoy.filters.network.http_connection_manager
                      typed_config:
                        "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
                        codec_type: auto
                        stat_prefix: ingress_http
                        route_config:
                          name: local_route
                          virtual_hosts:
                            - name: backend
                              domains:
                                - "*"
                              routes:
                                - match:
                                    prefix: "/"
                                  route:
                                    cluster: service
                        http_filters:
                          - name: envoy.ext_authz
                            typed_config:
                              "@type": type.googleapis.com/envoy.extensions.filters.http.ext_authz.v3.ExtAuthz
                              transport_api_version: V3
                              with_request_body:
                                max_request_bytes: 8192
                                allow_partial_message: true
                              failure_mode_allow: false
                              grpc_service:
                                google_grpc:
                                  target_uri: 127.0.0.1:9191
                                  stat_prefix: ext_authz
                                timeout: 1s
                          - name: envoy.filters.http.router
                            typed_config:
                              "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
          clusters:
            - name: service
              connect_timeout: 0.25s
              type: strict_dns
              lb_policy: round_robin
              load_assignment:
                cluster_name: service
                endpoints:
                  - lb_endpoints:
                      - endpoint:
                          address:
                            socket_address:
                              address: 127.0.0.1
                              port_value: <APPLICATION PORT>
        admin:
          access_log_path: "/dev/null"
          address:
            socket_address:
              address: 0.0.0.0
              port_value: 8001
        layered_runtime:
          layers:
            - name: static_layer_0
              static_layer:
                envoy:
                  resource_limits:
                    listener:
                      example_listener_name:
                        connection_limit: 10000
                overload:
                  global_downstream_max_connections: 50000
    kind: ConfigMap
    metadata:
      name: {{ include "<chart-name>.fullname" . }}-proxy
      namespace: {{ .Release.Namespace }}
      labels:
        {{- include "<chart-name>.labels" . | nindent 4 }}

1.6 Create OPA configMap

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: {{ include "<chart-name>.fullname" . }}-opa
      labels:
        {{- include "<chart-name>.labels" . | nindent 4 }}
    data:
      config.yaml: |-
        {{- .Values.opa | toYaml | nindent 4 }}

1.7 Create GHCR secret

    apiVersion: v1
    kind: Secret
    metadata:
      name: {{ include "<chart-name>.fullname" . }}-ghcr
      labels:
        {{- include "<chart-name>.labels" . | nindent 4 }}
    type: Opaque
    data:
      GHCR_TOKEN: {{ .Values.ghcrToken | b64enc }}

1.8 Update values.yaml

    opa:
      services:
        ghcr-registry:
          url: https://ghcr.io
          type: oci
          credentials:
            bearer:
              scheme: "Bearer"
              token: "${GHCR_TOKEN}"
      bundles:
        authz:
          service: ghcr-registry
          resource: ghcr.io/Maersk-Global/<app-repo-name>-opa:${OPA_TAG}
          persist: true
          polling:
            min_delay_seconds: 300
            max_delay_seconds: 900
      plugins:
        envoy_ext_authz_grpc:
          addr: ":9191"
          path: "com/maersk/global/decision/result"
          enable-performance-metrics: true
      decision_logs:
        console: true
      status:
        console: true
        prometheus: true
      distributed_tracing:
        type: grpc
        address: grafana-agent-traces.platform-monitoring.svc:4317
        service_name: ${DEPLOYMENT_NAME}
        sample_percentage: 100

2. Define application policy

2.1 Create folder structure with following files

    mas
    ├── cdt
    │   └── data.json
    ├── preprod
    │   └── data.json
    ├── prod
    │   └── data.json
    └── src
        ├── .manifest
        └── policies
            └── microservice.rego

2.2 Update .manifest file

    {
      "roots": [""]
    }

2.5 Add base level application policy to microservice.rego

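    package envoy.authz

    import rego.v1

    # Import global authz policy
    import data.com.maersk.global.authz as global

    # Alias for the HTTP attributes of the Envoy request. Needed for
    # http_request.path below unless http_request is already defined
    # elsewhere in this package.
    import input.attributes.request.http as http_request

    default allow := false

    # Allow access to spring actuator health endpoints.
    # Change based on health endpoints of the service if not spring.
    allow if {
        glob.match("/actuator/*", null, http_request.path)
    }

    allow if {
        global.allow
    }

2.6 Define further policy checks based on requirements for the application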

For more information on creating application policies, see How to create an application policy.
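While iterating on further checks, it can help to have an input document shaped like the one Envoy sends to OPA. The following is a minimal sketch, assuming the input layout visible in the decision logs in section 4.3 (input.attributes.request.http). The helper name build_input and the host name my-service are illustrative and not part of the Maersk Authorization Service tooling.

```python
import json
from typing import Optional


def build_input(method: str, path: str, host: str, token: Optional[str] = None) -> dict:
    """Build an input document shaped like the Envoy ext_authz input in the decision logs."""
    headers = {
        ":authority": host,
        ":method": method,
        ":path": path,
        ":scheme": "http",
        "accept": "*/*",
    }
    if token is not None:
        # Only add the header when a token is supplied, to model anonymous requests too.
        headers["authorization"] = f"Bearer {token}"
    return {
        "attributes": {
            "request": {
                "http": {
                    "headers": headers,
                    "host": host,
                    "method": method,
                    "path": path,
                    "protocol": "HTTP/1.1",
                    "scheme": "http",
                }
            }
        }
    }


if __name__ == "__main__":
    # Hypothetical example: an unauthenticated health check request.
    print(json.dumps(build_input("GET", "/actuator/health", "my-service"), indent=2))
```

The printed JSON can be saved to a file and passed to opa eval with --input, together with --data pointing at the policy folder. Rules that depend on the global policy will only evaluate meaningfully if the global policy is supplied as well.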

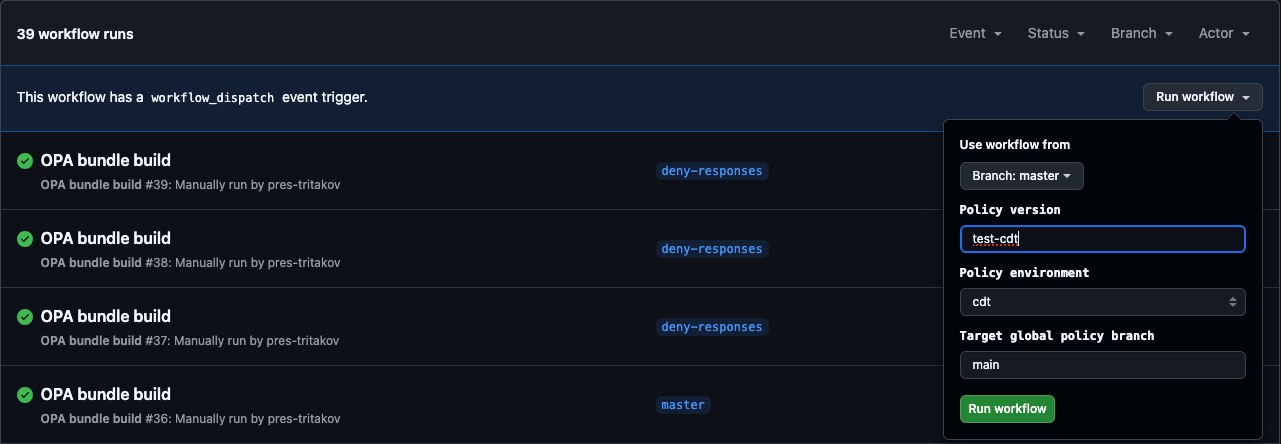

3. Set up GitHub Actions workflows

3.1 Create policy bundle build and push workflow

Reference: OPA Bundle Build Commons

    name: OPA bundle build
    on:
      workflow_dispatch:
        inputs:
          version:
            description: 'Policy version'
            required: false
          environment:
            description: 'Policy environment'
            required: false
            default: 'cdt'
            type: choice
            options:
              - cdt
              - preprod
              - prod
          global:
            description: 'Target global policy branch'
            default: 'main'
    jobs:
      build:
        environment: ${{ github.event.inputs.environment }}
        runs-on: ${{ vars.SH_UBUNTU_LATEST }}
        steps:
          - name: Bundle
            uses: Maersk-Global/github-actions-commons/main@opa-policy-generator
            with:
              checkoutToken: ${{ secrets.EE_CHECKOUT_TOKEN }}
              version: ${{ github.event.inputs.version }}
              environment: ${{ github.event.inputs.environment }}
              globalPolicyBranch: ${{ github.event.inputs.global }}

3.2 Update application helm deployment workflow

If you deploy with the Maersk-Global/github-actions-commons/helm-upgrade action, pass the organisational secret GHCR_PULL_TOKEN and the OPA bundle version (OPA_TAG) as --set parameters in extraArgs. Example:

    extraArgs: --set ghcrToken=${{ secrets.GHCR_PULL_TOKEN }} --set opaTag=${{ inputs.opaVersion }}

4. Build, deploy, test

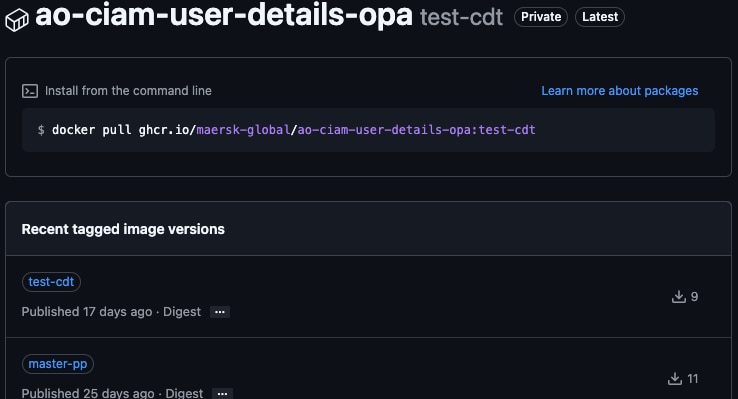

4.1 Build policy bundle

Example:

Successful Policy Build

OPA bundle in GitHub OCI Registry

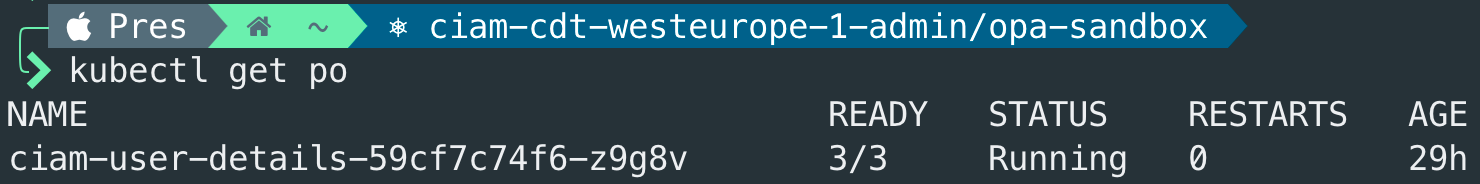

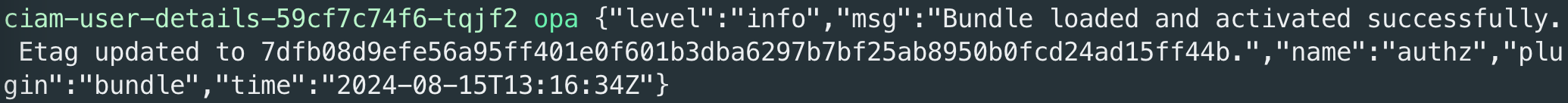

4.2 Deploy application

Example application running with OPA and Envoy:

Service running with OPA and Envoy

OPA bundle load logs

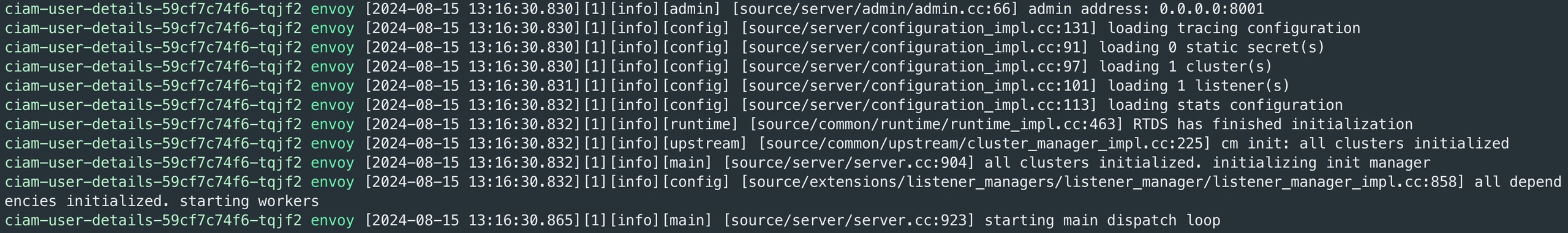

Envoy startup logs

4.3 Test

Every request to your application should now pass through Envoy, which asks OPA for the authorization decision. Because decision_logs.console is enabled in values.yaml, OPA writes a decision log entry with the result to its console output for each request it evaluates.
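A quick way to exercise the sidecars is to send one request with a token and one without, then compare the status codes. The sketch below uses only the Python standard library. The function name request_status and the URL in the usage comment are hypothetical. A denied request is typically answered with 403 by the Envoy ext_authz filter, but the global policy may return a different status, so the sketch reports the code rather than asserting one.

```python
import urllib.error
import urllib.request
from typing import Optional


def request_status(url: str, token: Optional[str] = None, timeout: float = 5.0) -> int:
    """Send a GET request and return the HTTP status code, including 4xx and 5xx."""
    req = urllib.request.Request(url, method="GET")
    if token is not None:
        req.add_header("Authorization", f"Bearer {token}")
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urllib raises on 4xx/5xx responses; the status code is still the result we want.
        return err.code


# Hypothetical usage; replace the URL and token with values for your service:
#   print(request_status("http://my-service/actuator/health"))
#   print(request_status("http://my-service/some/protected/path", token="<access token>"))
```

Each call should produce a matching entry in the OPA decision log, like the examples that follow.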

Example decision log for request with INVALID ForgeRock token

{

"bundles": {

"authz": {}

},

"decision_id": "d10390ce-72b0-4a1b-ab4a-ab29cf673052",

"input": {

"attributes": {

"request": {

"http": {

"headers": {

":authority": "ciam-user-details",

":method": "GET",

":path": "/user-accounts/tritakov.pres@maersk.com/user-settings",

":scheme": "http",

"accept": "*/*",

"authorization": "Bearer eyJ0eXAiOiJKV1QiLCJraWQiOiIzaTA5WlhQbE1PTEpCYXFnSHUxK3ZRc1AwOVU9IiwiYWxnIjoiUlMyNTYifQ...",

"x-forwarded-proto": "http"

},

"host": "ciam-user-details",

"method": "GET",

"path": "/user-accounts/tritakov.pres@maersk.com/user-settings",

"protocol": "HTTP/1.1",

"scheme": "http"

}

}

}

},

"labels": {

"id": "4a27f145-8c81-49bf-84bc-6100c75f382a",

"version": "0.67.1"

},

"level": "info",

"metrics": {

"counter_rego_builtin_http_send_interquery_cache_hits": 2,

"counter_server_query_cache_hit": 0,

"timer_rego_builtin_http_send_ns": 1670704,

"timer_rego_input_parse_ns": 100300,

"timer_rego_query_compile_ns": 117801,

"timer_rego_query_eval_ns": 3181407,

"timer_rego_query_parse_ns": 70300,

"timer_server_handler_ns": 3540408

},

"msg": "Decision Log",

"path": "com/maersk/global/authz/allow",

"req_id": 10,

"requested_by": "10.1.5.153:60730",

"result": false,

"time": "2024-08-19T09:09:04Z",

"timestamp": "2024-08-19T09:09:04.825541318Z",

"type": "openpolicyagent.org/decision_logs"

}

Example decision log for request with VALID ForgeRock token

{

"bundles": {

"authz": {}

},

"decision_id": "5c4d81c4-af92-4570-9bd0-ee2c8c9a9446",

"input": {

"attributes": {

"request": {

"http": {

"headers": {

":authority": "ciam-user-details",

":method": "GET",

":path": "/user-accounts/tritakov.pres@maersk.com/user-settings",

":scheme": "http",

"accept": "*/*",

"authorization": "Bearer eyJ0eXAiOiJKV1QiLCJraWQiOiIzaTA5WlhQbE1PTEpCYXFnSHUxK3ZRc1AwOVU9IiwiYWxnIjoiUlMyNTYifQ...",

"x-forwarded-proto": "http"

},

"host": "ciam-user-details",

"method": "GET",

"path": "/user-accounts/tritakov.pres@maersk.com/user-settings",

"protocol": "HTTP/1.1",

"scheme": "http"

}

}

}

},

"labels": {

"id": "4a27f145-8c81-49bf-84bc-6100c75f382a",

"version": "0.67.1"

},

"level": "info",

"metrics": {

"counter_rego_builtin_http_send_interquery_cache_hits": 2,

"counter_server_query_cache_hit": 0,

"timer_rego_builtin_http_send_ns": 1636307,

"timer_rego_input_parse_ns": 91600,

"timer_rego_query_compile_ns": 99600,

"timer_rego_query_eval_ns": 3022313,

"timer_rego_query_parse_ns": 67401,

"timer_server_handler_ns": 3340214

},

"msg": "Decision Log",

"path": "com/maersk/global/authz/allow",

"req_id": 11,

"requested_by": "10.1.5.153:43834",

"result": true,

"time": "2024-08-19T09:19:29Z",

"timestamp": "2024-08-19T09:19:29.92614041Z",

"type": "openpolicyagent.org/decision_logs"

}
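Decision logs like the two above are verbose, so a small filter can make allow/deny results easier to scan. The following is a minimal sketch, assuming OPA writes each console log entry as a single-line JSON object (the examples above are pretty-printed for readability) and that the fields match those shown above. The function name iter_decisions is illustrative.

```python
import json
from typing import Any, Iterable, Iterator


def _dig(obj: Any, *keys: str) -> Any:
    """Walk nested dicts, returning None as soon as a key is missing."""
    for key in keys:
        if not isinstance(obj, dict):
            return None
        obj = obj.get(key)
    return obj


def iter_decisions(lines: Iterable[str]) -> Iterator[dict]:
    """Yield a compact summary for each decision log line, skipping all other output."""
    for line in lines:
        line = line.strip()
        if not line.startswith("{"):
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue
        if not isinstance(entry, dict) or entry.get("msg") != "Decision Log":
            continue
        yield {
            "time": entry.get("time"),
            "decision_id": entry.get("decision_id"),
            "method": _dig(entry, "input", "attributes", "request", "http", "method"),
            "path": _dig(entry, "input", "attributes", "request", "http", "path"),
            "result": entry.get("result"),
        }
```

To use it, feed the OPA container's log output to iter_decisions line by line, for example by passing sys.stdin from a wrapper script, and print each summary.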